NVIDIA Generative AI Technology Used by Google DeepMind and Google Research Teams Now Optimized and Available to Google Cloud Customers WorldwideSAN FRANCISCO, Aug. 29, 2023 (GLOBE NEWSWIRE) …

Month: August 2023

Janice K. Lee, a.k.a Janice.Journal — the subject of this week’s In the NVIDIA Studio installment — is a TikTok sensation using AI to accelerate her creative process, find inspiration and automate repetitive tasks.

The ability to detect objects in the visual world is crucial for computer vision and machine intelligence, enabling applications like adaptive autonomous agents and versatile shopping systems. However, modern object detectors are limited by the manual annotations of their training data, resulting in a vocabulary size significantly smaller than the vast array of objects encountered in reality. To overcome this, the open-vocabulary detection task (OVD) has emerged, utilizing image-text pairs for training and incorporating new category names at test time by associating them with the image content. By treating categories as text embeddings, open-vocabulary detectors can predict a wide range of unseen objects. Various techniques such as image-text pre-training, knowledge distillation, pseudo labeling, and frozen models, often employing convolutional neural network (CNN) backbones, have been proposed. With the growing popularity of vision transformers (ViTs), it is important to explore their potential for building proficient open-vocabulary detectors.

The existing approaches assume the availability of pre-trained vision-language models (VLMs) and focus on fine-tuning or distillation from these models to address the disparity between image-level pre-training and object-level fine-tuning. However, as VLMs are primarily designed for image-level tasks like classification and retrieval, they do not fully leverage the concept of objects or regions during the pre-training phase. Thus, it could be beneficial for open-vocabulary detection if we build locality information into the image-text pre-training.

In “RO-ViT: Region-Aware Pretraining for Open-Vocabulary Object Detection with Vision Transformers”, presented at CVPR 2023, we introduce a simple method to pre-train vision transformers in a region-aware manner to improve open-vocabulary detection. In vision transformers, positional embeddings are added to image patches to encode information about the spatial position of each patch within the image. Standard pre-training typically uses full-image positional embeddings, which does not generalize well to detection tasks. Thus, we propose a new positional embedding scheme, called “cropped positional embedding”, that better aligns with the use of region crops in detection fine-tuning. In addition, we replace the softmax cross entropy loss with focal loss in contrastive image-text learning, allowing us to learn from more challenging and informative examples. Finally, we leverage recent advances in novel object proposals to enhance open-vocabulary detection fine-tuning, which is motivated by the observation that existing methods often miss novel objects during the proposal stage due to overfitting to foreground categories. We are also releasing the code here.

Region-aware image-text pre-training

Existing VLMs are trained to match an image as a whole to a text description. However, we observe there is a mismatch between the way the positional embeddings are used in the existing contrastive pre-training approaches and open-vocabulary detection. The positional embeddings are important to transformers as they provide the information of where each element in the set comes from. This information is often useful for downstream recognition and localization tasks. Pre-training approaches typically apply full-image positional embeddings during training, and use the same positional embeddings for downstream tasks, e.g., zero-shot recognition. However, the recognition occurs at region-level for open-vocabulary detection fine-tuning, which requires the full-image positional embeddings to generalize to regions that they never see during the pre-training.

To address this, we propose cropped positional embeddings (CPE). With CPE, we upsample positional embeddings from the image size typical for pre-training, e.g., 224×224 pixels, to that typical for detection tasks, e.g., 1024×1024 pixels. Then we randomly crop and resize a region, and use it as the image-level positional embeddings during pre-training. The position, scale, and aspect ratio of the crop is randomly sampled. Intuitively, this causes the model to view an image not as a full image in itself, but as a region crop from some larger unknown image. This better matches the downstream use case of detection where recognition occurs at region- rather than image-level.

We also find it beneficial to learn from hard examples with a focal loss. Focal loss enables finer control over how hard examples are weighted than what the softmax cross entropy loss can provide. We adopt the focal loss and replace it with the softmax cross entropy loss in both image-to-text and text-to-image losses. Both CPE and focal loss introduce no extra parameters and minimal computation costs.

Open-vocabulary detector fine-tuning

An open-vocabulary detector is trained with the detection labels of ‘base’ categories, but needs to detect the union of ‘base’ and ‘novel’ (unlabeled) categories at test time. Despite the backbone features pre-trained from the vast open-vocabulary data, the added detector layers (neck and heads) are newly trained with the downstream detection dataset. Existing approaches often miss novel/unlabeled objects in the object proposal stage because the proposals tend to classify them as background. To remedy this, we leverage recent advances in a novel object proposal method and adopt the localization quality-based objectness (i.e., centerness score) instead of object-or-not binary classification score, which is combined with the detection score. During training, we compute the detection scores for each detected region as the cosine similarity between the region’s embedding (computed via RoI-Align operation) and the text embeddings of the base categories. At test time, we append the text embeddings of novel categories, and the detection score is now computed with the union of the base and novel categories.

|

| The pre-trained ViT backbone is transferred to the downstream open-vocabulary detection by replacing the global average pooling with detector heads. The RoI-Align embeddings are matched with the cached category embeddings to obtain the VLM score, which is combined with the detection score into the open-vocabulary detection score. |

Results

We evaluate RO-ViT on the LVIS open-vocabulary detection benchmark. At the system-level, our best model achieves 33.6 box average precision on rare categories (APr) and 32.1 mask APr, which outperforms the best existing ViT-based approach OWL-ViT by 8.0 APr and the best CNN-based approach ViLD-Ens by 5.8 mask APr. It also exceeds the performance of many other approaches based on knowledge distillation, pre-training, or joint training with weak supervision.

|

| RO-ViT outperforms both the state-of-the-art (SOTA) ViT-based and CNN-based methods on LVIS open-vocabulary detection benchmark. We show mask AP on rare categories (APr) , except for SOTA ViT-based (OwL-ViT) where we show box AP. |

Apart from evaluating region-level representation through open-vocabulary detection, we evaluate the image-level representation of RO-ViT in image-text retrieval through the MS-COCO and Flickr30K benchmarks. Our model with 303M ViT outperforms the state-of-the-art CoCa model with 1B ViT on MS COCO, and is on par on Flickr30K. This shows that our pre-training method not only improves the region-level representation but also the global image-level representation for retrieval.

|

| We show zero-shot image-text retrieval on MS COCO and Flickr30K benchmarks, and compare with dual-encoder methods. We report recall@1 (top-1 recall) on image-to-text (I2T) and text-to-image (T2I) retrieval tasks. RO-ViT outperforms the state-of-the-art CoCa with the same backbone. |

Visualization of positional embeddings

We visualize and compare the learned positional embeddings of RO-ViT with the baseline. Each tile is the cosine similarity between positional embeddings of one patch and all other patches. For example, the tile in the top-left corner (marked in red) visualizes the similarity between the positional embedding of the location (row=1, column=1) and those positional embeddings of all other locations in 2D. The brightness of the patch indicates how close the learned positional embeddings of different locations are. RO-ViT forms more distinct clusters at different patch locations showing symmetrical global patterns around the center patch.

Conclusion

We present RO-ViT, a contrastive image-text pre-training framework to bridge the gap between image-level pre-training and open-vocabulary detection fine-tuning. Our methods are simple, scalable, and easy to apply to any contrastive backbones with minimal computation overhead and no increase in parameters. RO-ViT achieves the state-of-the-art on LVIS open-vocabulary detection benchmark and on the image-text retrieval benchmarks, showing the learned representation is not only beneficial at region-level but also highly effective at the image-level. We hope this study can help the research on open-vocabulary detection from the perspective of image-text pre-training which can benefit both region-level and image-level tasks.

Acknowledgements

Dahun Kim, Anelia Angelova, and Weicheng Kuo conducted this work and are now at Google DeepMind. We would like to thank our colleagues at Google Research for their advice and helpful discussions.

Companies are discovering how accelerated computing can boost their bottom lines while making a positive impact on the planet. The NVIDIA RAPIDS Accelerator for Apache Spark, software that speeds data analytics, not only raises performance and lowers costs, it increases energy efficiency, too. That means it can help companies meet goals for net-zero emissions of Read article >

Google’s Responsible AI research is built on a foundation of collaboration — between teams with diverse backgrounds and expertise, between researchers and product developers, and ultimately with the community at large. The Perception Fairness team drives progress by combining deep subject-matter expertise in both computer vision and machine learning (ML) fairness with direct connections to the researchers building the perception systems that power products across Google and beyond. Together, we are working to intentionally design our systems to be inclusive from the ground up, guided by Google’s AI Principles.

|

| Perception Fairness research spans the design, development, and deployment of advanced multimodal models including the latest foundation and generative models powering Google’s products. |

Our team’s mission is to advance the frontiers of fairness and inclusion in multimodal ML systems, especially related to foundation models and generative AI. This encompasses core technology components including classification, localization, captioning, retrieval, visual question answering, text-to-image or text-to-video generation, and generative image and video editing. We believe that fairness and inclusion can and should be top-line performance goals for these applications. Our research is focused on unlocking novel analyses and mitigations that enable us to proactively design for these objectives throughout the development cycle. We answer core questions, such as: How can we use ML to responsibly and faithfully model human perception of demographic, cultural, and social identities in order to promote fairness and inclusion? What kinds of system biases (e.g., underperforming on images of people with certain skin tones) can we measure and how can we use these metrics to design better algorithms? How can we build more inclusive algorithms and systems and react quickly when failures occur?

Measuring representation of people in media

ML systems that can edit, curate or create images or videos can affect anyone exposed to their outputs, shaping or reinforcing the beliefs of viewers around the world. Research to reduce representational harms, such as reinforcing stereotypes or denigrating or erasing groups of people, requires a deep understanding of both the content and the societal context. It hinges on how different observers perceive themselves, their communities, or how others are represented. There’s considerable debate in the field regarding which social categories should be studied with computational tools and how to do so responsibly. Our research focuses on working toward scalable solutions that are informed by sociology and social psychology, are aligned with human perception, embrace the subjective nature of the problem, and enable nuanced measurement and mitigation. One example is our research on differences in human perception and annotation of skin tone in images using the Monk Skin Tone scale.

Our tools are also used to study representation in large-scale content collections. Through our Media Understanding for Social Exploration (MUSE) project, we’ve partnered with academic researchers, nonprofit organizations, and major consumer brands to understand patterns in mainstream media and advertising content. We first published this work in 2017, with a co-authored study analyzing gender equity in Hollywood movies. Since then, we’ve increased the scale and depth of our analyses. In 2019, we released findings based on over 2.7 million YouTube advertisements. In the latest study, we examine representation across intersections of perceived gender presentation, perceived age, and skin tone in over twelve years of popular U.S. television shows. These studies provide insights for content creators and advertisers and further inform our own research.

|

| An illustration (not actual data) of computational signals that can be analyzed at scale to reveal representational patterns in media collections. [Video Collection / Getty Images] |

Moving forward, we’re expanding the ML fairness concepts on which we focus and the domains in which they are responsibly applied. Looking beyond photorealistic images of people, we are working to develop tools that model the representation of communities and cultures in illustrations, abstract depictions of humanoid characters, and even images with no people in them at all. Finally, we need to reason about not just who is depicted, but how they are portrayed — what narrative is communicated through the surrounding image content, the accompanying text, and the broader cultural context.

Analyzing bias properties of perceptual systems

Building advanced ML systems is complex, with multiple stakeholders informing various criteria that decide product behavior. Overall quality has historically been defined and measured using summary statistics (like overall accuracy) over a test dataset as a proxy for user experience. But not all users experience products in the same way.

Perception Fairness enables practical measurement of nuanced system behavior beyond summary statistics, and makes these metrics core to the system quality that directly informs product behaviors and launch decisions. This is often much harder than it seems. Distilling complex bias issues (e.g., disparities in performance across intersectional subgroups or instances of stereotype reinforcement) to a small number of metrics without losing important nuance is extremely challenging. Another challenge is balancing the interplay between fairness metrics and other product metrics (e.g., user satisfaction, accuracy, latency), which are often phrased as conflicting despite being compatible. It is common for researchers to describe their work as optimizing an “accuracy-fairness” tradeoff when in reality widespread user satisfaction is aligned with meeting fairness and inclusion objectives.

|

| We built and released the MIAP dataset as part of Open Images, leveraging our research on perception of socially relevant concepts and detection of biased behavior in complex systems to create a resource that furthers ML fairness research in computer vision. Original photo credits — left: Boston Public Library; middle: jen robinson; right: Garin Fons; all used with permission under the CC- BY 2.0 license. |

To these ends, our team focuses on two broad research directions. First, democratizing access to well-understood and widely-applicable fairness analysis tooling, engaging partner organizations in adopting them into product workflows, and informing leadership across the company in interpreting results. This work includes developing broad benchmarks, curating widely-useful high-quality test datasets and tooling centered around techniques such as sliced analysis and counterfactual testing — often building on the core representation signals work described earlier. Second, advancing novel approaches towards fairness analytics — including partnering with product efforts that may result in breakthrough findings or inform launch strategy.

Advancing AI responsibly

Our work does not stop with analyzing model behavior. Rather, we use this as a jumping-off point for identifying algorithmic improvements in collaboration with other researchers and engineers on product teams. Over the past year we’ve launched upgraded components that power Search and Memories features in Google Photos, leading to more consistent performance and drastically improving robustness through added layers that keep mistakes from cascading through the system. We are working on improving ranking algorithms in Google Images to diversify representation. We updated algorithms that may reinforce historical stereotypes, using additional signals responsibly, such that it’s more likely for everyone to see themselves reflected in Search results and find what they’re looking for.

This work naturally carries over to the world of generative AI, where models can create collections of images or videos seeded from image and text prompts and can answer questions about images and videos. We’re excited about the potential of these technologies to deliver new experiences to users and as tools to further our own research. To enable this, we’re collaborating across the research and responsible AI communities to develop guardrails that mitigate failure modes. We’re leveraging our tools for understanding representation to power scalable benchmarks that can be combined with human feedback, and investing in research from pre-training through deployment to steer the models to generate higher quality, more inclusive, and more controllable output. We want these models to inspire people, producing diverse outputs, translating concepts without relying on tropes or stereotypes, and providing consistent behaviors and responses across counterfactual variations of prompts.

Opportunities and ongoing work

Despite over a decade of focused work, the field of perception fairness technologies still seems like a nascent and fast-growing space, rife with opportunities for breakthrough techniques. We continue to see opportunities to contribute technical advances backed by interdisciplinary scholarship. The gap between what we can measure in images versus the underlying aspects of human identity and expression is large — closing this gap will require increasingly complex media analytics solutions. Data metrics that indicate true representation, situated in the appropriate context and heeding a diversity of viewpoints, remains an open challenge for us. Can we reach a point where we can reliably identify depictions of nuanced stereotypes, continually update them to reflect an ever-changing society, and discern situations in which they could be offensive? Algorithmic advances driven by human feedback point a promising path forward.

Recent focus on AI safety and ethics in the context of modern large model development has spurred new ways of thinking about measuring systemic biases. We are exploring multiple avenues to use these models — along with recent developments in concept-based explainability methods, causal inference methods, and cutting-edge UX research — to quantify and minimize undesired biased behaviors. We look forward to tackling the challenges ahead and developing technology that is built for everybody.

Acknowledgements

We would like to thank every member of the Perception Fairness team, and all of our collaborators.

Since 2018, NVIDIA DLSS has leveraged AI to enable gamers and creators to increase performance and crank up their quality. Over time, this solution has evolved…

Since 2018, NVIDIA DLSS has leveraged AI to enable gamers and creators to increase performance and crank up their quality. Over time, this solution has evolved…

Since 2018, NVIDIA DLSS has leveraged AI to enable gamers and creators to increase performance and crank up their quality. Over time, this solution has evolved to include groundbreaking advancements in super resolution and frame generation.

Now, the AI neural rendering technology takes the next step forward with DLSS 3.5. This update includes an important new feature called Ray Reconstruction.

Ray Reconstruction is a new neural network for all GeForce RTX GPUs that further improves the image quality of ray-traced images. Trained on 5x more data than DLSS 3, DLSS 3.5 replaces hand-tuned denoisers with an NVIDIA supercomputer-trained AI network that generates higher quality pixels in between sampled rays.

Cyberpunk 2077, Cyberpunk 2077: Phantom Liberty, Alan Wake 2, and Portal with RTX, all coming this fall, will include Ray Reconstruction.

DLSS 3.5 also adds Auto Scene Change Detection to Frame Generation. This feature aims to automatically prevent Frame Generation from producing difficult-to-create frames between a substantial scene change. It does this by analyzing the in-game camera orientation on every DLSS Frame Generation frame pair.

Auto Scene Change Detection eases integration of new DLSS 3 titles, is backwards compatible with all DLSS 3 integrations, and supports all rendering platforms. In SDK build variants, the scene change detector provides onscreen aids to indicate when a scene change is detected so the developer can pass in the reset flag.

With these new features, you can achieve even better results with ray tracing, and more effectively manage fast scene changes.

Integration into a custom engine is developer-friendly with the Streamline 2.2 SDK, an open-source cross-IHV framework. Simply identify which resources are required for the decided Streamline plug-in. In the game’s rendering pipeline, you can then trigger when to execute the plug-in.

For Unreal Engine titles, simply install the plug-in into your project and most of the work is done. For a step-by-step guide to integration, see How to Successfully Integrate NVIDIA DLSS 3.

Ray Reconstruction will soon be available to all game developers—sign up to be notified. Auto Scene Change Detection is available now in DLSS 3.5 through the Streamline 2.2 SDK and Unreal Engine plug-in.

A full-scale error-corrected quantum computer will be able to solve some problems that are impossible for classical computers, but building such a device is a huge endeavor. We are proud of the milestones that we have achieved toward a fully error-corrected quantum computer, but that large-scale computer is still some number of years away. Meanwhile, we are using our current noisy quantum processors as flexible platforms for quantum experiments.

In contrast to an error-corrected quantum computer, experiments in noisy quantum processors are currently limited to a few thousand quantum operations or gates, before noise degrades the quantum state. In 2019 we implemented a specific computational task called random circuit sampling on our quantum processor and showed for the first time that it outperformed state-of-the-art classical supercomputing.

Although they have not yet reached beyond-classical capabilities, we have also used our processors to observe novel physical phenomena, such as time crystals and Majorana edge modes, and have made new experimental discoveries, such as robust bound states of interacting photons and the noise-resilience of Majorana edge modes of Floquet evolutions.

We expect that even in this intermediate, noisy regime, we will find applications for the quantum processors in which useful quantum experiments can be performed much faster than can be calculated on classical supercomputers — we call these “computational applications” of the quantum processors. No one has yet demonstrated such a beyond-classical computational application. So as we aim to achieve this milestone, the question is: What is the best way to compare a quantum experiment run on such a quantum processor to the computational cost of a classical application?

We already know how to compare an error-corrected quantum algorithm to a classical algorithm. In that case, the field of computational complexity tells us that we can compare their respective computational costs — that is, the number of operations required to accomplish the task. But with our current experimental quantum processors, the situation is not so well defined.

In “Effective quantum volume, fidelity and computational cost of noisy quantum processing experiments”, we provide a framework for measuring the computational cost of a quantum experiment, introducing the experiment’s “effective quantum volume”, which is the number of quantum operations or gates that contribute to a measurement outcome. We apply this framework to evaluate the computational cost of three recent experiments: our random circuit sampling experiment, our experiment measuring quantities known as “out of time order correlators” (OTOCs), and a recent experiment on a Floquet evolution related to the Ising model. We are particularly excited about OTOCs because they provide a direct way to experimentally measure the effective quantum volume of a circuit (a sequence of quantum gates or operations), which is itself a computationally difficult task for a classical computer to estimate precisely. OTOCs are also important in nuclear magnetic resonance and electron spin resonance spectroscopy. Therefore, we believe that OTOC experiments are a promising candidate for a first-ever computational application of quantum processors.

|

| Plot of computational cost and impact of some recent quantum experiments. While some (e.g., QC-QMC 2022) have had high impact and others (e.g., RCS 2023) have had high computational cost, none have yet been both useful and hard enough to be considered a “computational application.” We hypothesize that our future OTOC experiment could be the first to pass this threshold. Other experiments plotted are referenced in the text. |

Random circuit sampling: Evaluating the computational cost of a noisy circuit

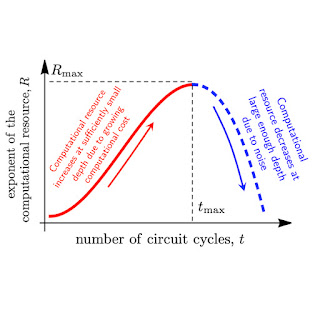

When it comes to running a quantum circuit on a noisy quantum processor, there are two competing considerations. On one hand, we aim to do something that is difficult to achieve classically. The computational cost — the number of operations required to accomplish the task on a classical computer — depends on the quantum circuit’s effective quantum volume: the larger the volume, the higher the computational cost, and the more a quantum processor can outperform a classical one.

But on the other hand, on a noisy processor, each quantum gate can introduce an error to the calculation. The more operations, the higher the error, and the lower the fidelity of the quantum circuit in measuring a quantity of interest. Under this consideration, we might prefer simpler circuits with a smaller effective volume, but these are easily simulated by classical computers. The balance of these competing considerations, which we want to maximize, is called the “computational resource”, shown below.

We can see how these competing considerations play out in a simple “hello world” program for quantum processors, known as random circuit sampling (RCS), which was the first demonstration of a quantum processor outperforming a classical computer. Any error in any gate is likely to make this experiment fail. Inevitably, this is a hard experiment to achieve with significant fidelity, and thus it also serves as a benchmark of system fidelity. But it also corresponds to the highest known computational cost achievable by a quantum processor. We recently reported the most powerful RCS experiment performed to date, with a low measured experimental fidelity of 1.7×10-3, and a high theoretical computational cost of ~1023. These quantum circuits had 700 two-qubit gates. We estimate that this experiment would take ~47 years to simulate in the world’s largest supercomputer. While this checks one of the two boxes needed for a computational application — it outperforms a classical supercomputer — it is not a particularly useful application per se.

OTOCs and Floquet evolution: The effective quantum volume of a local observable

There are many open questions in quantum many-body physics that are classically intractable, so running some of these experiments on our quantum processor has great potential. We typically think of these experiments a bit differently than we do the RCS experiment. Rather than measuring the quantum state of all qubits at the end of the experiment, we are usually concerned with more specific, local physical observables. Because not every operation in the circuit necessarily impacts the observable, a local observable’s effective quantum volume might be smaller than that of the full circuit needed to run the experiment.

We can understand this by applying the concept of a light cone from relativity, which determines which events in space-time can be causally connected: some events cannot possibly influence one another because information takes time to propagate between them. We say that two such events are outside their respective light cones. In a quantum experiment, we replace the light cone with something called a “butterfly cone,” where the growth of the cone is determined by the butterfly speed — the speed with which information spreads throughout the system. (This speed is characterized by measuring OTOCs, discussed later.) The effective quantum volume of a local observable is essentially the volume of the butterfly cone, including only the quantum operations that are causally connected to the observable. So, the faster information spreads in a system, the larger the effective volume and therefore the harder it is to simulate classically.

We apply this framework to a recent experiment implementing a so-called Floquet Ising model, a physical model related to the time crystal and Majorana experiments. From the data of this experiment, one can directly estimate an effective fidelity of 0.37 for the largest circuits. With the measured gate error rate of ~1%, this gives an estimated effective volume of ~100. This is much smaller than the light cone, which included two thousand gates on 127 qubits. So, the butterfly velocity of this experiment is quite small. Indeed, we argue that the effective volume covers only ~28 qubits, not 127, using numerical simulations that obtain a larger precision than the experiment. This small effective volume has also been corroborated with the OTOC technique. Although this was a deep circuit, the estimated computational cost is 5×1011, almost one trillion times less than the recent RCS experiment. Correspondingly, this experiment can be simulated in less than a second per data point on a single A100 GPU. So, while this is certainly a useful application, it does not fulfill the second requirement of a computational application: substantially outperforming a classical simulation.

Information scrambling experiments with OTOCs are a promising avenue for a computational application. OTOCs can tell us important physical information about a system, such as the butterfly velocity, which is critical for precisely measuring the effective quantum volume of a circuit. OTOC experiments with fast entangling gates offer a potential path for a first beyond-classical demonstration of a computational application with a quantum processor. Indeed, in our experiment from 2021 we achieved an effective fidelity of Feff ~ 0.06 with an experimental signal-to-noise ratio of ~1, corresponding to an effective volume of ~250 gates and a computational cost of 2×1012.

While these early OTOC experiments are not sufficiently complex to outperform classical simulations, there is a deep physical reason why OTOC experiments are good candidates for the first demonstration of a computational application. Most of the interesting quantum phenomena accessible to near-term quantum processors that are hard to simulate classically correspond to a quantum circuit exploring many, many quantum energy levels. Such evolutions are typically chaotic and standard time-order correlators (TOC) decay very quickly to a purely random average in this regime. There is no experimental signal left. This does not happen for OTOC measurements, which allows us to grow complexity at will, only limited by the error per gate. We anticipate that a reduction of the error rate by half would double the computational cost, pushing this experiment to the beyond-classical regime.

Conclusion

Using the effective quantum volume framework we have developed, we have determined the computational cost of our RCS and OTOC experiments, as well as a recent Floquet evolution experiment. While none of these meet the requirements yet for a computational application, we expect that with improved error rates, an OTOC experiment will be the first beyond-classical, useful application of a quantum processor.

SANTA CLARA, Calif., Aug. 24, 2023 (GLOBE NEWSWIRE) — NVIDIA will present at the following events for the financial community: Goldman Sachs Communacopia & Technology ConferenceTuesday, Sept. …

Large language models (LLMs), such as GPT-3 and PaLM, have shown impressive progress in recent years, which have been driven by scaling up models and training data sizes. Nonetheless, a long standing debate has been whether LLMs can reason symbolically (i.e., manipulating symbols based on logical rules). For example, LLMs are able to perform simple arithmetic operations when numbers are small, but struggle to perform with large numbers. This suggests that LLMs have not learned the underlying rules needed to perform these arithmetic operations.

While neural networks have powerful pattern matching capabilities, they are prone to overfitting to spurious statistical patterns in the data. This does not hinder good performance when the training data is large and diverse and the evaluation is in-distribution. However, for tasks that require rule-based reasoning (such as addition), LLMs struggle with out-of-distribution generalization as spurious correlations in the training data are often much easier to exploit than the true rule-based solution. As a result, despite significant progress in a variety of natural language processing tasks, performance on simple arithmetic tasks like addition has remained a challenge. Even with modest improvement of GPT-4 on the MATH dataset, errors are still largely due to arithmetic and calculation mistakes. Thus, an important question is whether LLMs are capable of algorithmic reasoning, which involves solving a task by applying a set of abstract rules that define the algorithm.

In “Teaching Algorithmic Reasoning via In-Context Learning”, we describe an approach that leverages in-context learning to enable algorithmic reasoning capabilities in LLMs. In-context learning refers to a model’s ability to perform a task after seeing a few examples of it within the context of the model. The task is specified to the model using a prompt, without the need for weight updates. We also present a novel algorithmic prompting technique that enables general purpose language models to achieve strong generalization on arithmetic problems that are more difficult than those seen in the prompt. Finally, we demonstrate that a model can reliably execute algorithms on out-of-distribution examples with an appropriate choice of prompting strategy.

Teaching an algorithm as a skill

In order to teach a model an algorithm as a skill, we develop algorithmic prompting, which builds upon other rationale-augmented approaches (e.g., scratchpad and chain-of-thought). Algorithmic prompting extracts algorithmic reasoning abilities from LLMs, and has two notable distinctions compared to other prompting approaches: (1) it solves tasks by outputting the steps needed for an algorithmic solution, and (2) it explains each algorithmic step with sufficient detail so there is no room for misinterpretation by the LLM.

To gain intuition for algorithmic prompting, let’s consider the task of two-number addition. In a scratchpad-style prompt, we process each digit from right to left and keep track of the carry value (i.e., we add a 1 to the next digit if the current digit is greater than 9) at each step. However, the rule of carry is ambiguous after seeing only a few examples of carry values. We find that including explicit equations to describe the rule of carry helps the model focus on the relevant details and interpret the prompt more accurately. We use this insight to develop an algorithmic prompt for two-number addition, where we provide explicit equations for each step of computation and describe various indexing operations in non-ambiguous formats.

|

| Illustration of various prompt strategies for addition. |

Using only three prompt examples of addition with answer length up to five digits, we evaluate performance on additions of up to 19 digits. Accuracy is measured over 2,000 total examples sampled uniformly over the length of the answer. As shown below, the use of algorithmic prompts maintains high accuracy for questions significantly longer than what’s seen in the prompt, which demonstrates that the model is indeed solving the task by executing an input-agnostic algorithm.

|

| Test accuracy on addition questions of increasing length for different prompting methods. |

Leveraging algorithmic skills as tool use

To evaluate if the model can leverage algorithmic reasoning in a broader reasoning process, we evaluate performance using grade school math word problems (GSM8k). We specifically attempt to replace addition calculations from GSM8k with an algorithmic solution.

Motivated by context length limitations and possible interference between different algorithms, we explore a strategy where differently-prompted models interact with one another to solve complex tasks. In the context of GSM8k, we have one model that specializes in informal mathematical reasoning using chain-of-thought prompting, and a second model that specializes in addition using algorithmic prompting. The informal mathematical reasoning model is prompted to output specialized tokens in order to call on the addition-prompted model to perform the arithmetic steps. We extract the queries between tokens, send them to the addition-model and return the answer to the first model, after which the first model continues its output. We evaluate our approach using a difficult problem from the GSM8k (GSM8k-Hard), where we randomly select 50 addition-only questions and increase the numerical values in the questions.

|

| An example from the GSM8k-Hard dataset. The chain-of-thought prompt is augmented with brackets to indicate when an algorithmic call should be performed. |

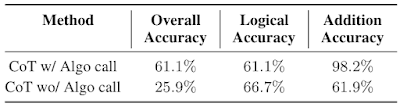

We find that using separate contexts and models with specialized prompts is an effective way to tackle GSM8k-Hard. Below, we observe that the performance of the model with algorithmic call for addition is 2.3x the chain-of-thought baseline. Finally, this strategy presents an example of solving complex tasks by facilitating interactions between LLMs specialized to different skills via in-context learning.

|

| Chain-of-thought (CoT) performance on GSM8k-Hard with or without algorithmic call. |

Conclusion

We present an approach that leverages in-context learning and a novel algorithmic prompting technique to unlock algorithmic reasoning abilities in LLMs. Our results suggest that it may be possible to transform longer context into better reasoning performance by providing more detailed explanations. Thus, these findings point to the ability of using or otherwise simulating long contexts and generating more informative rationales as promising research directions.

Acknowledgements

We thank our co-authors Behnam Neyshabur, Azade Nova, Hugo Larochelle and Aaron Courville for their valuable contributions to the paper and great feedback on the blog. We thank Tom Small for creating the animations in this post. This work was done during Hattie Zhou’s internship at Google Research.

As part of NVIDIA and Microsoft’s collaboration to bring more choice to gamers, new Microsoft Store integration has been added to GeForce NOW that lets gamers stream select titles from the Xbox PC Game Pass catalog on GeForce NOW, starting today. With the Microsoft Store integration, members will see a brand-new Xbox button on supported Read article >